Methods

Please see our publication in Futures for more information.

Different topics have different search strategies (the keywords that we use to search for publications) and different inclusion criteria (the criteria that we use to assess publications as "relevant" or "not relevant" for inclusion in the bibliography).

Topic: Existential risk and global catastrophic risk

For the purpose of this assessment, a risk is "catastrophic" if it causes at least 10 million deaths (approximately) and a risk is "existential" if it causes the extinction of the human species or the collapse of human civilization1. Publications that are relevant to this topic do not need to include the exact phrase "existential risk" or "global catastrophic risk", but they should be about a risk that is global and catastrophic in scale.
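The two definitions amount to a simple decision rule. Below is a minimal Python sketch. The names are hypothetical, and it treats the approximate 10 million figure as a hard cut-off, which is a simplification.

```python
# Approximate threshold from the definition above, treated here as a hard cut-off.
CATASTROPHIC_DEATH_THRESHOLD = 10_000_000


def classify_risk(deaths: int, causes_extinction_or_collapse: bool) -> str:
    """Return 'existential', 'global catastrophic', or 'below threshold'.

    Existential risks are a subset of global catastrophic risks, so the
    existential check is made first.
    """
    if causes_extinction_or_collapse:
        return "existential"
    if deaths >= CATASTROPHIC_DEATH_THRESHOLD:
        return "global catastrophic"
    return "below threshold"


print(classify_risk(50_000_000, False))  # global catastrophic
```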

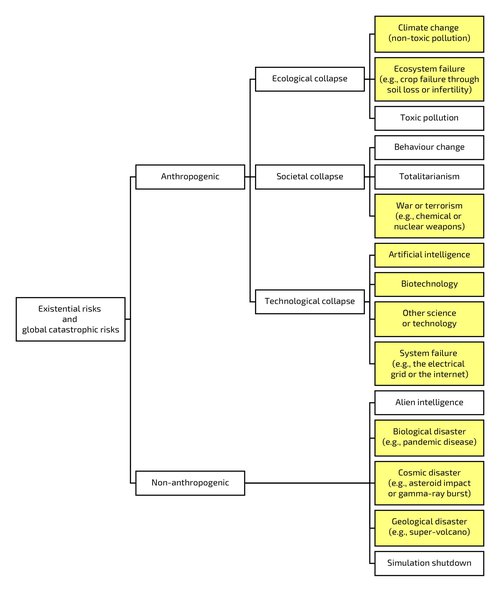

Relevant publications for this topic should be tagged as relevant not only to existential risk or global catastrophic risk in general but also to the specific risks that they discuss (if there are any). However, we are also planning to do separate searches for each specific risk, using risk-specific search terms. The risks that we are focusing on are highlighted in yellow in Figure 1, below.

Inclusion Criteria

Publications that are relevant to this topic should be explicitly about the possibility, probability, impact, or management of existential or global catastrophic risks, as opposed to other aspects of these risks that are only implicitly relevant to this topic. For example, a publication about the probability of an asteroid impact that could kill all humans should be included, whereas a publication about some other aspect of an asteroid impact (e.g., the geological evidence of an asteroid impact in the past) should not be included. A publication about climate change should be included only if it is about global catastrophic climate change. Likewise, a publication about insurance against catastrophic risk should be included only if it is about global catastrophic risk (and loss of life, as opposed to financial loss), and a publication about disaster management should be included only if it is about a global disaster (as opposed to a global response to a local or regional disaster).

Alternatively, a more common-sense criterion is to ask whether or not a publication is really about existential risk or about global catastrophic risk, rather than something that is only tangentially related to such a risk. Many publications seem to make passing reference to things that are allegedly essential to human survival without actually discussing them as such.

Relevant publications should meet at least one of the following criteria.

- Discussion of existential risk or global catastrophic risk per se (explicit, not implicit)

- Assessment of such a risk (e.g., the probability or impact of nuclear winter in the event of nuclear war)

- Discussion of a strategy for managing such a risk (e.g., strategic food reserves to mitigate the risk of human extinction from catastrophes that destroy crops)

- Comparison of these risks (e.g., the relative risk of human extinction from asteroid impact compared to artificial intelligence)

- Philosophical discussion that is relevant to these risks (e.g., the "value" of the future lives that would be saved by preventing the extinction of the human species)

Publications about artistic, fictional, or religious works should not be included.
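The inclusion rule above can be written as a small screening check: a publication must meet at least one listed criterion and must not be about an artistic, fictional, or religious work. In this Python sketch, the criterion labels, the `Screening` record, and `is_relevant` are all hypothetical. Whether a criterion is met is still a human assessor's judgement.

```python
from dataclasses import dataclass, field
from typing import Optional, Set

# Shorthand labels for the five inclusion criteria listed above (hypothetical).
INCLUSION_CRITERIA = {
    "discusses_risk_per_se",
    "assesses_risk",
    "discusses_management_strategy",
    "compares_risks",
    "philosophical_discussion",
}

# Publications about these kinds of works are excluded regardless of criteria met.
EXCLUDED_WORK_TYPES = {"artistic", "fictional", "religious"}


@dataclass
class Screening:
    """A human assessor's judgement about one publication (hypothetical record)."""
    criteria_met: Set[str] = field(default_factory=set)
    about_work_type: Optional[str] = None  # e.g. "fictional" for a paper about a novel


def is_relevant(s: Screening) -> bool:
    unknown = s.criteria_met - INCLUSION_CRITERIA
    if unknown:
        raise ValueError(f"unknown criteria: {sorted(unknown)}")
    if s.about_work_type in EXCLUDED_WORK_TYPES:
        return False
    # At least one of the listed criteria must be met.
    return len(s.criteria_met) > 0


print(is_relevant(Screening({"assesses_risk"})))  # True
```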

Figure 1: Categories of Risks

These categories are defined by the cause of human extinction (or the cause of the end of human civilization as we know it). The Existential Risk Research Assessment is focused on ten of these fifteen categories (highlighted in yellow).

Notes on the Categories of Risks

Ecological collapse

Climate change (non-toxic pollution)

This category is about changes in biogeochemical cycles (e.g., the carbon cycle). These changes are not themselves toxic to humans (e.g., the carbon dioxide in the atmosphere is breathable), but the consequences of these changes (e.g., catastrophic climate change) could cause human extinction or the end of human civilization. This differentiates this category from "toxic pollution". Publications about chlorofluorocarbons and holes in the ozone layer should also be in this category.

Ecosystem failure

This category is about the loss of ecosystem services (e.g., food production) through the loss of biodiversity beyond an ecological tipping point (e.g., the loss of soil fertility). It may be difficult to differentiate this category from "climate change" (climate regulation is also an ecosystem service), but publications about climate change as a consequence of burning fossil fuels (not biodiversity loss) should not be in this category. Publications about ecosystem-service failure as a consequence of another catastrophe (e.g., asteroid impact, nuclear winter, super-volcano, or climate change) should not be in this category unless they are about biodiversity loss (not crop failure through heat, drought, or lack of sunlight).

Toxic pollution

We are not focusing on this category, but it is about human extinction or the end of human civilization through exposure to toxic pollutants (not through biodiversity loss or climate change).

Societal collapse

Behaviour change

We are not focusing on this category, but it is about the end of human civilization through behaviour change. Publications about humans deciding not to reproduce or deciding not to live in a society would be in this category.

Totalitarianism

We are not focusing on this category, but it is about the end of human civilization through totalitarianism (e.g., permanent oppression). Publications about other forms of governance failure (e.g., "the tragedy of the commons") should be in the category for the risk to which the governance failure is relevant (e.g., "climate change" or "ecosystem failure").

War or terrorism

This category is about the end of human civilization through conflict. Publications about weapons of mass destruction (e.g., biological, chemical, or nuclear weapons) or their consequences (e.g., nuclear winter) should be in this category.

Technological collapse

Artificial intelligence

Publications about superintelligence should be in this category (but publications on lethal autonomous weapons could also be in "war or terrorism").

Biotechnology

Publications about synthetic biology and genetic engineering (e.g., CRISPR) should be in this category.

Other science or technology

Publications about physics accidents (e.g., at the Large Hadron Collider) should be in this category. Publications about geoengineering accidents should also be in this category (and possibly also in "climate change"). Publications about molecular nanotechnology (e.g., grey goo) and about transhumanism (through neuroscience) should also be in this category.

System failure

This category is about the end of human civilization through the failure of the technological systems upon which it depends (e.g., the electricity grid or the internet). Publications about the failure of food supply chains should be in this category.

Non-anthropogenic risks

Alien intelligence

We are not focusing on this category, but publications about alien superintelligence (as a counterpoint to artificial intelligence) would be in this category. Publications about non-intelligent alien life would be in "biological disaster".

Biological disaster

Publications about (natural, non-engineered) pandemic diseases (e.g., Ebola, influenza, or smallpox) should be in this category.

Cosmic disaster

Publications about asteroid impacts, gamma-ray bursts, solar flares, and other cosmic phenomena should be in this category.

Geological disaster

Publications about super-volcanoes should be in this category.

Simulation shutdown

We are not focusing on this category, but publications about the end of a simulated universe (e.g., "The Matrix") would be in this category.
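The taxonomy in Figure 1 has two levels (four groups, fifteen categories), so it can be represented as nested dictionaries. In this Python sketch, the focus flags are inferred from the notes above: a category is marked as not in focus wherever its note says "We are not focusing on this category". The variable and function names are hypothetical. The resulting counts agree with the ten-of-fifteen figure.

```python
from typing import List

# group -> {category: in_focus}; in_focus marks the categories highlighted in yellow.
RISK_CATEGORIES = {
    "Ecological collapse": {
        "Climate change (non-toxic pollution)": True,
        "Ecosystem failure": True,
        "Toxic pollution": False,
    },
    "Societal collapse": {
        "Behaviour change": False,
        "Totalitarianism": False,
        "War or terrorism": True,
    },
    "Technological collapse": {
        "Artificial intelligence": True,
        "Biotechnology": True,
        "Other science or technology": True,
        "System failure": True,
    },
    "Non-anthropogenic risks": {
        "Alien intelligence": False,
        "Biological disaster": True,
        "Cosmic disaster": True,
        "Geological disaster": True,
        "Simulation shutdown": False,
    },
}


def focus_categories() -> List[str]:
    """Names of the categories the assessment focuses on."""
    return [name for group in RISK_CATEGORIES.values()
            for name, in_focus in group.items() if in_focus]


total = sum(len(group) for group in RISK_CATEGORIES.values())
print(len(focus_categories()), "of", total)  # 10 of 15
```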

Search Strategy

The search strategy for this topic is based on the "Global Challenges Bibliography" in Appendix 1 of Global Challenges: 12 Risks that Threaten Human Civilization2, which included publications up to 2013. We used the keywords that were reported in Appendix 1 to search the titles, abstracts, keywords, and references of publications in Scopus. We then compared our search results with the publications in Appendix 1. If a publication in Appendix 1 was not in the search results, but it was in Scopus, then we added keywords that would find this publication (unless there were no keywords that seemed specific enough to existential risk to justify their use). Using this extended set of keywords, we then searched Scopus again, and we continue to search Scopus3 regularly for new publications.
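The keyword-extension step is essentially a loop: run the search, list the Appendix 1 publications that are indexed but were missed, and inspect each one for a sufficiently specific keyword. The Python sketch below shows only the comparison step. It uses hypothetical in-memory records, with plain substring matching standing in for Scopus search. Deciding which keyword to add remains a human judgement.

```python
from typing import Dict, List

# Hypothetical sample data: publication ID -> searchable fields.
DATABASE: Dict[str, Dict[str, str]] = {
    "a": {"title": "Existential risk and the far future", "abstract": "", "keywords": ""},
    "b": {"title": "Scenarios for human extinction", "abstract": "", "keywords": ""},
}
# Hypothetical IDs of Appendix 1 publications; "c" is not indexed in the database.
APPENDIX_1: List[str] = ["a", "b", "c"]


def matches(record: Dict[str, str], keywords: List[str]) -> bool:
    """Case-insensitive substring match against title, abstract, and keywords."""
    text = " ".join(record.get(f, "") for f in ("title", "abstract", "keywords")).lower()
    return any(k.lower() in text for k in keywords)


def missed_by_search(appendix: List[str], database: Dict[str, Dict[str, str]],
                     keywords: List[str]) -> List[str]:
    """Appendix 1 publications that are in the database but not found by the keywords."""
    return [pid for pid in appendix
            if pid in database and not matches(database[pid], keywords)]


keywords = ["existential risk"]
print(missed_by_search(APPENDIX_1, DATABASE, keywords))  # ['b']
keywords.append("human extinction")  # judged specific enough to existential risk
print(missed_by_search(APPENDIX_1, DATABASE, keywords))  # []
```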

As noted in Appendix 1, the literature on specific risks (e.g., climate change) is "too voluminous to catalogue", and this is one reason that we have limited this topic to general-interest publications4. Another reason is that studying multiple risks at the same time should enable us to make comparisons between these risks, think about interactions between them, and suggest strategies for managing multiple risks.

Search Terms

Title-Abstract-Keywords: "catastrophic risk" OR "existential risk" OR "existential catastrophe" OR "global catastrophe" OR "human extinction" OR "infinite risk" OR "xrisk" OR "x-risk" OR apocalypse OR doomsday OR doom OR "extinction of human" OR "extinction of the human" OR "end of the world" OR "world's end" OR "world ending" OR "end of civilization" OR "collapse of civilization" OR "survival of civilization" OR "survival of humanity" OR "human survival" OR "survival of human" OR "survival of the human" OR "global collapse" OR "historical collapse" OR "catastrophic collapse" OR "global disaster" OR "existential threat" OR "catastrophic harm"

OR

References: "catastrophic risk" OR "existential risk" OR "existential catastrophe" OR "global catastrophe" OR "human extinction" OR "infinite risk" OR "xrisk" OR "x-risk"
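Assuming Scopus's TITLE-ABS-KEY field code for the title/abstract/keywords search and its REF field code for the reference search, the two term lists above combine into a single advanced-search string. In Scopus, terms in double quotation marks are, as we understand it, searched as loose phrases. The Python sketch below only assembles the string and does not call any Scopus API. The helper names are hypothetical.

```python
from typing import List

TAK_TERMS: List[str] = [
    "catastrophic risk", "existential risk", "existential catastrophe",
    "global catastrophe", "human extinction", "infinite risk", "xrisk", "x-risk",
    "apocalypse", "doomsday", "doom", "extinction of human",
    "extinction of the human", "end of the world", "world's end", "world ending",
    "end of civilization", "collapse of civilization", "survival of civilization",
    "survival of humanity", "human survival", "survival of human",
    "survival of the human", "global collapse", "historical collapse",
    "catastrophic collapse", "global disaster", "existential threat",
    "catastrophic harm",
]
# The reference search uses only the first eight terms.
REF_TERMS: List[str] = TAK_TERMS[:8]
# Terms that appear without quotation marks in the published search string.
BARE_TERMS = {"apocalypse", "doomsday", "doom"}


def fmt(term: str) -> str:
    """Quote every term except the few that are searched as bare words."""
    return term if term in BARE_TERMS else f'"{term}"'


def or_clause(field_code: str, terms: List[str]) -> str:
    return f"{field_code}({' OR '.join(fmt(t) for t in terms)})"


def build_query() -> str:
    return f"{or_clause('TITLE-ABS-KEY', TAK_TERMS)} OR {or_clause('REF', REF_TERMS)}"


print(build_query())
```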

Machine Learning

We are using an artificial neural network (implemented in TensorFlow5) to predict the relevance of publications that have not yet been assessed, based on the abstracts of publications that have been assessed (labelled as "relevant" or "irrelevant"). First, we exclude publications that do not have abstracts. Second, we randomly split the remaining publications into a training set (80% of publications) and a test set (20% of publications). Third, we use the first 200 words of each abstract in the training set as the input into the neural network (200 is approximately the average number of words in these abstracts), and we use an "embedding" layer in the network to encode each of these words as a vector of numbers ("word embedding"), based on its relationship to the other words in the abstract. Fourth, we pass these word embeddings to a fully connected layer in the network. Once the network is trained, we use it to predict the probability that each publication in the test set is relevant. We then generate three different models by setting three different probability thresholds, which control the trade-off between precision and recall. Please see Machine Learning for more information. Finally, we use these models to predict the relevance of publications that have not yet been assessed by humans.
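The data preparation and thresholding steps described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not our pipeline. The neural network itself is omitted, and `probs` stands in for the probabilities that a trained TensorFlow model would output for the test set. The function names, random seed, and three threshold values are hypothetical.

```python
import random
from typing import List, Optional, Sequence, Tuple

MAX_WORDS = 200       # first 200 words of each abstract
TRAIN_FRACTION = 0.8  # 80% training set, 20% test set

Record = Tuple[Optional[str], int]  # (abstract, label); label 1 = relevant


def prepare(records: Sequence[Record], seed: int = 0) -> Tuple[List[Record], List[Record]]:
    """Drop records without abstracts, truncate each abstract, and split at random."""
    kept = [(a, y) for a, y in records if a and a.strip()]
    truncated = [(" ".join(a.split()[:MAX_WORDS]), y) for a, y in kept]
    random.Random(seed).shuffle(truncated)
    cut = int(len(truncated) * TRAIN_FRACTION)
    return truncated[:cut], truncated[cut:]


def precision_recall(probs: Sequence[float], labels: Sequence[int],
                     threshold: float) -> Tuple[float, float]:
    """Precision and recall when probability >= threshold is predicted 'relevant'."""
    tp = sum(1 for p, y in zip(probs, labels) if p >= threshold and y == 1)
    fp = sum(1 for p, y in zip(probs, labels) if p >= threshold and y == 0)
    fn = sum(1 for p, y in zip(probs, labels) if p < threshold and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical test-set probabilities from the trained network, with human labels.
probs = [0.9, 0.8, 0.6, 0.4, 0.2]
labels = [1, 0, 1, 1, 0]
for threshold in (0.3, 0.5, 0.85):  # three hypothetical thresholds -> three "models"
    print(threshold, precision_recall(probs, labels, threshold))
```

Lowering the threshold raises recall at the cost of precision. With these sample values, a threshold of 0.3 gives precision 0.75 and recall 1.0, while 0.85 gives precision 1.0 and recall of about 0.33.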

Notes

1 For a definition of global catastrophic risks and existential risks (which are a subset of global catastrophic risks), please see Bostrom, N., Ćirković, M.M. (2008). Global Catastrophic Risks. Oxford University Press, Oxford.

2 Pamlin, D., Armstrong, S., Baum, S. (2015). Global Challenges: 12 Risks that Threaten Human Civilization. Global Challenges Foundation.

3 We plan to search additional databases as soon as possible.

4 We hope that, by working together, we can keep the literature on specific risks from proving "too voluminous to catalogue", but we acknowledge that a catalogue would be of limited usefulness if it were too voluminous to be read (by humans).

5 For more information, please see Géron, A. (2017). Hands-On Machine Learning with Scikit-Learn and TensorFlow: Concepts, Tools, and Techniques to Build Intelligent Systems. O'Reilly Media, Inc., Sebastopol, California.

Manually Curated Bibliography

Other bibliographies are to be announced for specific x-risks, e.g., artificial intelligence or asteroid impact.

ML Bibliography

Publications predicted to be relevant by our Machine Learning (ML) model but not yet assessed by humans, and thus not yet in the non-ML bibliography.